As technology continues to reshape industries, the news media faces significant transformations. Over the next five years, artificial intelligence (AI) and automation will redefine newsroom workflows, enhancing efficiency, reducing costs, and enabling more targeted audience engagement. However, news organisations will have to balance the potential of these technologies with the need to maintain editorial integrity and public trust.

AI's Role in Automating Routine Tasks

AI's immediate impact in newsrooms will be in automating routine tasks such as transcription, content tagging, and basic content creation. The Reuters Institute for the Study of Journalism's Trends and Predictions 2024 report finds that automating workflows such as research and copyediting can deliver much-needed efficiency gains, especially as traditional revenue streams come under increasing pressure. By taking on these mundane tasks, AI will free journalists to concentrate on higher-value work such as investigative reporting and in-depth analysis.

For example, The Washington Post uses its in-house tool Heliograf to automate short news stories such as election results and sports updates. In 2023 the Post announced two cross-functional teams to drive AI innovation: an AI Taskforce and an AI Hub.

The Associated Press (AP) has expanded its use of AI to automate the production of earnings reports and local news stories. This automation allows AP to produce thousands of stories annually, freeing journalists to focus on more complex reporting.

AI in Research and Personalisation

Beyond automation, AI will play a significant role in improving research processes and personalising content. Nic Newman’s Reuters Institute report suggests that AI will enhance content recommendations and help tailor news to individual user preferences. Personalisation could help address news avoidance by providing readers with content that aligns more closely with their interests.

One example of this is The Times of London, which uses AI algorithms to personalise newsletters for its subscribers. This initiative uses data on reading habits to send targeted articles that resonate with individual users, boosting engagement and subscription retention. As audiences increasingly expect content that caters to their specific needs, AI tools are becoming central to helping news outlets meet these expectations.

AI tools are expected to be increasingly integrated into existing services as public attitudes evolve. Rasmus Kleis Nielsen predicts that, over time, AI may become as invisible as search engine algorithms, with users barely noticing its presence. While this integration may make news more accessible, human oversight will remain essential to ensure content quality and uphold ethical standards.

Maintaining Trust Through Human Oversight

The use of AI in journalism raises ethical concerns, particularly around trust and transparency. Research shows that the public is cautious about AI-generated content and prefers AI to be used primarily behind the scenes. Audiences expect human oversight, especially for stories involving sensitive or complex topics, and believe final editorial decisions should rest with journalists. Transparency is vital: any AI-generated content must be clearly labelled to maintain readers' trust.

AI and Multimedia Production

Journalism's future extends beyond text. AI is already making significant strides in video and audio production. The Reuters Institute notes that AI tools are streamlining video editing processes, making production more efficient by automating time-consuming tasks. This allows newsrooms, including smaller outlets, to produce high-quality multimedia content more affordably.

The Trends and Predictions 2024 report suggests that publishers will increasingly focus on video, newsletters, and podcasts to engage their audiences.

The Risks of Over-Reliance on AI

Despite its benefits, AI presents risks, particularly in content creation. Over-reliance on AI could harm a news organisation's reputation if AI-generated content contains errors or biases. The Trends and Predictions 2024 report warns that using generative AI in content production poses a reputational risk. Human oversight is critical to ensuring that AI-generated content meets standards of accuracy and impartiality.

Moreover, AI should not replace human creativity. While automation can enhance efficiency, it must be implemented cautiously to avoid diminishing the unique value that journalists bring to their work.

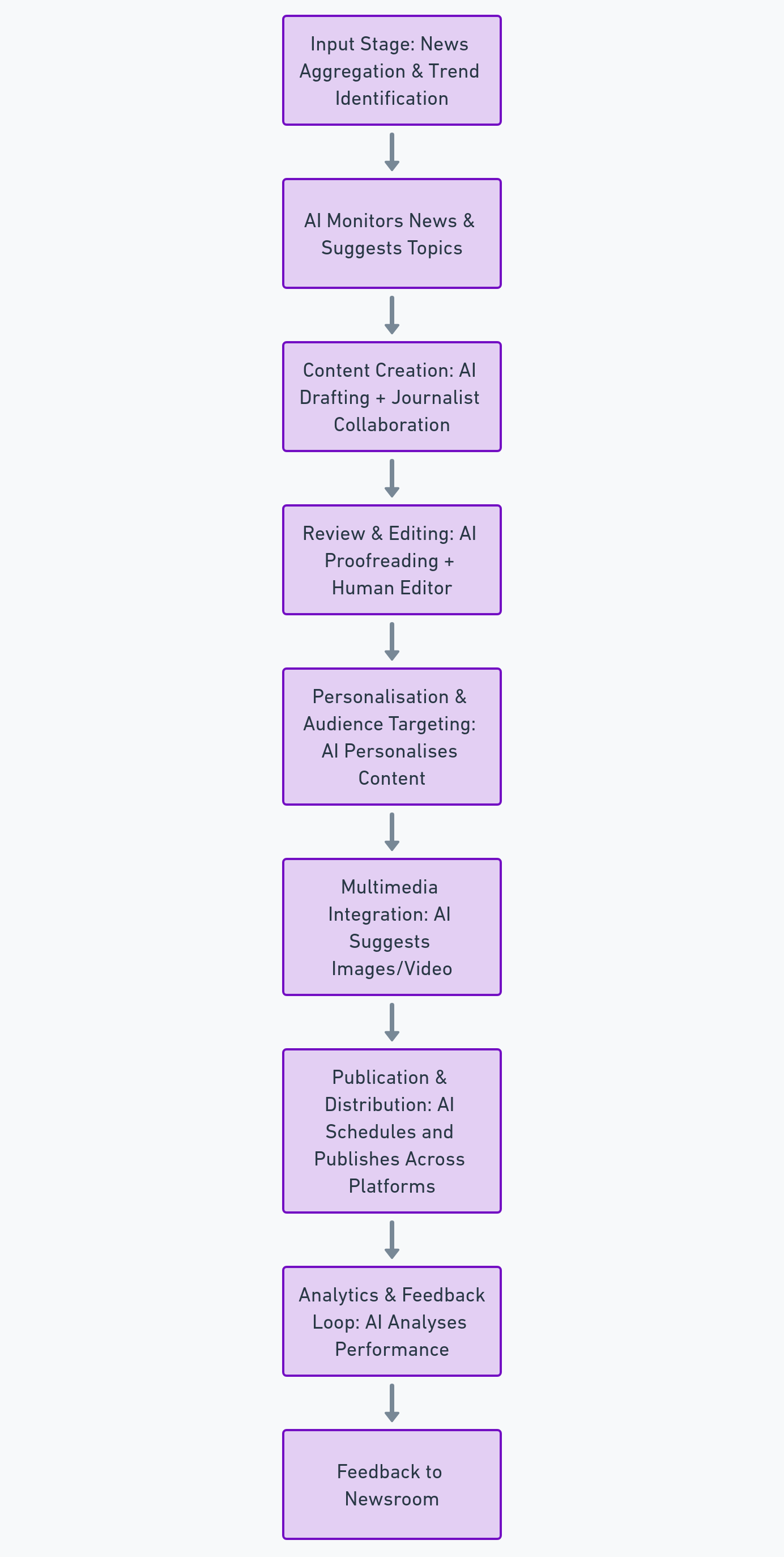

Proposed AI-Driven Newsroom Workflow: A Step-by-Step Guide

Story Identification & Research (Automated with AI Assistance)

AI tools like news aggregators, trend analysis platforms, and social media monitoring systems track trending topics and breaking news. Journalists receive alerts on potential stories, curated based on audience data and relevance. AI assists in gathering background information, sourcing facts, and referencing past reports.

Initial Content Drafting (Human-AI Collaboration)

For routine topics such as sports scores or market updates, generative AI drafts the initial version. For more complex stories, AI provides outlines, while journalists lead on in-depth analysis and interviews. The draft is formatted to fit the publication's style, streamlining the layout process. A prime example is Bloomberg's Cyborg system, which automates the creation of financial reports: it processes vast amounts of financial data to generate thousands of stories per year, handling routine content quickly while freeing journalists to focus on analysis and interpretation.

Review and Editing (Human Oversight)

AI tools assist with grammar checks and style consistency, while journalists focus on fact-checking and refining the tone, especially for sensitive topics. AI also optimises metadata, headlines, and SEO tags.

Personalisation & Audience Targeting (AI-Driven)

AI algorithms personalise content for different audience segments. For example, shorter versions may be tailored for mobile readers, while in-depth analyses target subscribers. Localised or demographically relevant versions enhance the article's impact. Bertie, the AI-powered tool used by Forbes, assists journalists by providing real-time data insights, suggesting article topics, and even offering headline recommendations based on engagement data. This use of AI has helped Forbes maintain high-quality content while increasing output.

Multimedia Integration (AI-Assisted)

AI suggests relevant images, videos, and infographics to accompany articles. Video editing software automatically assembles footage to align with the content. Journalists and designers approve the final multimedia elements, ensuring alignment with editorial standards.

Publication and Distribution (Automated and Optimised)

AI systems schedule and publish content across multiple platforms, including websites, apps, and social media. Real-time engagement monitoring allows AI to adjust promotion strategies, focusing on platforms where the content is performing best.

Analytics and Continuous Feedback (AI-Driven Insights)

After publication, AI analytics tools provide insights into reader engagement, such as time spent on articles and social media shares. These insights feed back into the editorial process, helping to refine future content strategies.
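To make the human checkpoints in this workflow explicit, here is a minimal Python sketch. It is hypothetical: the seven stage names come from the steps above, but the `Mode` labels, the `Story` and `run` names, and the rule that an unapproved human gate blocks every later stage are illustrative assumptions, not a description of any newsroom's actual system.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    # Hypothetical labels mapped from the stage descriptions above.
    AUTOMATED = "automated"          # AI runs the stage on its own
    COLLABORATIVE = "collaborative"  # AI drafts or outlines, journalists lead
    HUMAN_GATE = "human_gate"        # a person must sign off before moving on


@dataclass
class Stage:
    name: str
    mode: Mode


# The seven stages of the proposed workflow, in order.
# The mode assigned to each stage is an interpretation, not part of any real product.
WORKFLOW = [
    Stage("Story identification & research", Mode.AUTOMATED),
    Stage("Initial content drafting", Mode.COLLABORATIVE),
    Stage("Review and editing", Mode.HUMAN_GATE),
    Stage("Personalisation & audience targeting", Mode.AUTOMATED),
    Stage("Multimedia integration", Mode.HUMAN_GATE),
    Stage("Publication and distribution", Mode.AUTOMATED),
    Stage("Analytics and continuous feedback", Mode.AUTOMATED),
]


@dataclass
class Story:
    slug: str
    # Names of the human-gated stages an editor has signed off.
    approvals: set = field(default_factory=set)


def run(story, workflow=WORKFLOW):
    """Advance a story through the stages in order.

    Stops at the first human gate that has not been approved, so nothing
    downstream (including publication) happens without sign-off.
    Returns the names of the stages completed.
    """
    completed = []
    for stage in workflow:
        if stage.mode is Mode.HUMAN_GATE and stage.name not in story.approvals:
            break  # wait for a journalist or designer to approve
        completed.append(stage.name)
    return completed


if __name__ == "__main__":
    story = Story("local-election-results")
    print(run(story))  # halts before "Review and editing"
    story.approvals.add("Review and editing")
    story.approvals.add("Multimedia integration")
    print(run(story))  # all seven stages complete
```

Treating the human checkpoints as gates that block every later stage mirrors the argument that final editorial decisions should rest with journalists. A working system would also need roles, audit trails and the feedback loop from analytics back into story selection, which this sketch leaves out.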

Balancing Efficiency, Ethics, and the Future of Journalism

As AI becomes more integrated into newsroom workflows, news organisations must carefully balance the benefits of automation with the need to uphold core journalistic principles of accuracy, fairness, and trustworthiness. While AI offers opportunities to improve efficiency and personalise content, it also introduces challenges that cannot be overlooked.

Bias in AI output is a concern, as algorithms may perpetuate existing prejudices. To address this, news organisations are increasingly focusing on transparency, human oversight, and auditing AI outputs for fairness. The impact on jobs must also be considered: AI should support journalists with routine tasks but never replace the critical thinking and creativity that define the profession. Meanwhile, AI can assist in combating misinformation, though human fact-checking remains crucial to maintaining the integrity of the news.

In the end, the future success of newsrooms will depend on their ability to harness AI in ways that enhance journalism while preserving its values.

Post-Script

This blog was created primarily using AI, through careful prompt engineering and analysis of relevant documents. I have trained one Custom GPT to mimic my writing style and another on logic and problem-solving. I used Grammarly and Quillbot to quality-control the writing, and I used Claude, Gemini and Google to fact-check. The image was created in ChatGPT, as was the diagram. However, the AI did not do the thinking; I did. The role of AI was to assist, not replace, human judgment. If we accept that the point of writing is to communicate efficiently, the real test of this piece should be whether it effectively achieved that objective. Welcome to the new normal.